26 The Importance of Early Attitudes Toward Mathematics and Science (Ing & Nylund-Gibson, 2017)

This document presents a replication of the latent transition analysis (LTA) conducted by Ing and Nylund-Gibson, (2017), which investigated how students’ attitudes toward mathematics and science evolve over time and how those attitudinal trajectories relate to later academic outcomes. Using nationally representative longitudinal data from the LSAY study, we follow students from Grade 7 to Grade 12, classifying them into latent attitudinal profiles at each wave and modeling transitions between those profiles across time. This replication reproduces the authors’ manual 3-step LTA approach, including: (1) estimating an invariant unconditional model to identify latent profiles across grades; (2) using fixed logits to classify students into profiles at each wave; and (3) examining how class membership and transitions relate to distal outcomes and demographic covariates. Where applicable, we extend the original analysis by visualizing transition patterns, computing subgroup differences using z-tests, and formatting outputs for clear interpretability.

26.1 Load Packages

library(tidyverse)

library(haven)

library(glue)

library(MplusAutomation)

library(here)

library(janitor)

library(gt)

library(cowplot)

library(DiagrammeR)

library(webshot2)

library(stringr)

library(flextable)

library(officer)

library(dplyr)

library(tidyr)

library(haven)

library(psych)

library(ggrepel)

library(PNWColors)

library(multcompView)26.2 Prepare Data

lsay_data <- read_sav(here("tc_lta", "data", "Dataset_Jul9_Mplus.sav"))

# Filter to follow-up sample (target ~1824 rows)

lsay_data <- lsay_data %>% filter(RSTEMM %in% c(0, 1))

# Define survey questions

all_questions <- c(

"AB39A", "AB39H", "AB39I", "AB39K", "AB39L", "AB39M", "AB39T", "AB39U", "AB39W", "AB39X", # 7th grade

"GA32A", "GA32H", "GA32I", "GA32K", "GA32L", "GA33A", "GA33H", "GA33I", "GA33K", "GA33L", # 10th grade

"KA46A", "KA46H", "KA46I", "KA46K", "KA46L", "KA47A", "KA47H", "KA47I", "KA47K", "KA47L" # 12th grade

)

# Rename variables >8 characters

lsay_data <- lsay_data %>%

rename(

SCIG8 = ScienceG8,

SCIG11 = ScienceG11

)

# Recode 9999 to NA (missing)

lsay_data <- lsay_data %>%

mutate(across(all_of(all_questions), ~if_else(. == 9999, NA_real_, .)))

names(lsay_data) <- toupper(names(lsay_data))26.3 Descriptive Statistics

26.3.1 Create Table 1

This table provides the overall mean and standard deviation for each attitudinal item at Grades 7, 10, and 12. These values summarize general trends in the full sample and serve as a foundation for the latent class models that follow.

# Function to compute stats (count, mean, SD)

compute_stats <- function(data, question, grade, question_name) {

data %>%

summarise(

Grade = grade,

Count = sum(!is.na(.data[[question]])),

Mean = mean(.data[[question]], na.rm = TRUE),

SD = sd(.data[[question]], na.rm = TRUE)

) %>%

mutate(Question = question_name)

}

# Define question names and mappings

table_setup <- tibble(

question_code = all_questions,

grade = rep(c(7, 10, 12), each = 10),

question_name = rep(

c(

"I enjoy math",

"Math is useful in everyday problems",

"Math helps a person think logically",

"It is important to know math to get a good job",

"I will use math in many ways as an adult",

"I enjoy science",

"Science is useful in everyday problems",

"Science helps a person think logically",

"It is important to know science to get a good job",

"I will use science in many ways as an adult"

),

times = 3

)

)

# Compute stats for all questions

table1_data <- pmap_dfr(

list(table_setup$question_code, table_setup$grade, table_setup$question_name),

~compute_stats(lsay_data, ..1, ..2, ..3)

) %>%

mutate(

Mean = round(Mean, 2),

SD = round(SD, 2)

) %>%

arrange(match(Question, table_setup$question_name), Grade) %>%

select(Question, Grade, Count, Mean, SD)

# Build table

table1_gt <- table1_data %>%

gt(groupname_col = "Question") %>%

tab_header(

title = "Table 1. Descriptive Statistics for Mathematics and Science Attitudinal Survey Items Included In Analyses"

) %>%

cols_label(

Grade = "Grade",

Count = "N",

Mean = "M",

SD = "SD"

) %>%

fmt_number(

columns = c(Mean, SD),

decimals = 2

)

# Show table

table1_gt| Table 1. Descriptive Statistics for Mathematics and Science Attitudinal Survey Items Included In Analyses | |||

| Grade | N | M | SD |

|---|---|---|---|

| I enjoy math | |||

| 7 | 1882 | 0.69 | 0.46 |

| 10 | 1532 | 0.63 | 0.48 |

| 12 | 1120 | 0.57 | 0.50 |

| Math is useful in everyday problems | |||

| 7 | 1851 | 0.72 | 0.45 |

| 10 | 1520 | 0.65 | 0.48 |

| 12 | 1111 | 0.67 | 0.47 |

| Math helps a person think logically | |||

| 7 | 1847 | 0.65 | 0.48 |

| 10 | 1517 | 0.69 | 0.46 |

| 12 | 1108 | 0.71 | 0.45 |

| It is important to know math to get a good job | |||

| 7 | 1854 | 0.77 | 0.42 |

| 10 | 1517 | 0.68 | 0.47 |

| 12 | 1105 | 0.61 | 0.49 |

| I will use math in many ways as an adult | |||

| 7 | 1857 | 0.75 | 0.43 |

| 10 | 1525 | 0.65 | 0.48 |

| 12 | 1104 | 0.65 | 0.48 |

| I enjoy science | |||

| 7 | 1873 | 0.62 | 0.48 |

| 10 | 1526 | 0.59 | 0.49 |

| 12 | 1105 | 0.55 | 0.50 |

| Science is useful in everyday problems | |||

| 7 | 1840 | 0.40 | 0.49 |

| 10 | 1516 | 0.43 | 0.50 |

| 12 | 1099 | 0.48 | 0.50 |

| Science helps a person think logically | |||

| 7 | 1850 | 0.49 | 0.50 |

| 10 | 1516 | 0.53 | 0.50 |

| 12 | 1100 | 0.56 | 0.50 |

| It is important to know science to get a good job | |||

| 7 | 1857 | 0.40 | 0.49 |

| 10 | 1518 | 0.43 | 0.50 |

| 12 | 1099 | 0.38 | 0.49 |

| I will use science in many ways as an adult | |||

| 7 | 1873 | 0.46 | 0.50 |

| 10 | 1524 | 0.42 | 0.49 |

| 12 | 1103 | 0.44 | 0.50 |

Save Table 1

26.3.2 Create Table 2

This table presents model fit statistics for latent profile enumeration at Grades 7, 10, and 12. For each grade, models with 1 through 6 profiles were estimated and evaluated using the commonly used information criteria (AIC, BIC, SSA-BIC), entropy, and likelihood ratio tests (LMR, BLRT). These values help determine the most appropriate number of latent profiles to retain at each grade.

# Coerce to numeric just in case

lsay_data <- lsay_data %>%

mutate(

MATHG12 = as.numeric(MATHG12),

SCIG11 = as.numeric(SCIG11),

STEMSUP = as.numeric(STEMSUP)

)

# Build the summary table

table2_data <- tibble::tibble(

`Outcome Variable` = c(

"12th Grade Mathematics Achievement",

"11th Grade Science Achievement",

"STEM Career Attainmentᵃ"

),

N = c(

sum(lsay_data$MATHG12 != 9999 & !is.na(lsay_data$MATHG12)),

sum(lsay_data$SCIG11 != 9999 & !is.na(lsay_data$SCIG11)),

sum(lsay_data$STEMSUP != 9999 & !is.na(lsay_data$STEMSUP))

),

M = c(

round(mean(lsay_data$MATHG12[lsay_data$MATHG12 != 9999 & !is.na(lsay_data$MATHG12)]), 2),

round(mean(lsay_data$SCIG11[lsay_data$SCIG11 != 9999 & !is.na(lsay_data$SCIG11)]), 2),

round(mean(lsay_data$STEMSUP[lsay_data$STEMSUP != 9999 & !is.na(lsay_data$STEMSUP)]), 2)

),

SD = c(

round(sd(lsay_data$MATHG12[lsay_data$MATHG12 != 9999 & !is.na(lsay_data$MATHG12)]), 2),

round(sd(lsay_data$SCIG11[lsay_data$SCIG11 != 9999 & !is.na(lsay_data$SCIG11)]), 2),

NA

)

)

# Render the gt table

table2_gt <- table2_data %>%

gt() %>%

tab_header(

title = md("**Table 2. Descriptive Statistics for Distal Outcome Variables**")

) %>%

cols_label(

`Outcome Variable` = "Outcome Variable",

N = "N",

M = "M",

SD = "SD"

) %>%

sub_missing(columns = everything(), missing_text = "") %>%

tab_footnote(

footnote = "Binary indicator coded 1 = STEM occupation in mid-30s follow-up.",

locations = cells_body(rows = 3, columns = "Outcome Variable")

)

table2_gt| Table 2. Descriptive Statistics for Distal Outcome Variables | |||

| Outcome Variable | N | M | SD |

|---|---|---|---|

| 12th Grade Mathematics Achievement | 830 | 71.20 | 14.55 |

| 11th Grade Science Achievement | 1106 | 66.67 | 11.30 |

| STEM Career Attainmentᵃ1 | 1912 | 0.15 | |

| 1 Binary indicator coded 1 = STEM occupation in mid-30s follow-up. | |||

Save Table 2

26.4 Run Independent LCAs for Each Timepoint

26.4.1 Prepare Data for MPlusAutomation

To determine the appropriate number and structure of attitudinal profiles at each timepoint, we first estimate separate latent class models for Grades 7, 10, and 12. This step involves preparing the data in wide format and running independent LCAs using MplusAutomation. The goal is to identify the optimal number of latent profiles per grade based on model fit before moving to the longitudinal transition model.

26.4.2 Run LCA on 7th Grade Items

lca_belonging <- lapply(1:8, function(k) {

lca_enum <- mplusObject(

TITLE = glue("{k}-Class LCA for LSAY 7th Grade"),

VARIABLE = glue(

"categorical = AB39A AB39H AB39I AB39K AB39L AB39M AB39T AB39U AB39W AB39X;

usevar = AB39A AB39H AB39I AB39K AB39L AB39M AB39T AB39U AB39W AB39X;

missing = all(9999);

classes = c({k});"

),

ANALYSIS = "

estimator = mlr;

type = mixture;

starts = 500 10;

processors = 10;",

OUTPUT = "sampstat; residual; tech11; tech14;",

PLOT = "

type = plot3;

series = AB39A AB39H AB39I AB39K AB39L AB39M

AB39T AB39U AB39W AB39X(*);",

rdata = lsay_data

)

lca_enum_fit <- mplusModeler(

lca_enum,

dataout = glue(here("tc_lta","g7_enum", "lsay_g7.dat")),

modelout = glue(here("tc_lta","g7_enum", "c{k}_g7.inp")),

check = TRUE,

run = TRUE,

hashfilename = FALSE

)

})26.4.3 Run LCA on 10th Grade

lca_belonging <- lapply(1:8, function(k) {

lca_enum <- mplusObject(

TITLE = glue("{k}-Class LCA for LCA 10th Grade"),

VARIABLE = glue(

"categorical = GA32A GA32H GA32I GA32K GA32L GA33A GA33H GA33I GA33K GA33L;

usevar = GA32A GA32H GA32I GA32K GA32L GA33A GA33H GA33I GA33K GA33L;

missing = all(9999);

classes = c({k});"

),

ANALYSIS = "

estimator = mlr;

type = mixture;

starts = 500 10;

processors = 10;",

OUTPUT = "sampstat; residual; tech11; tech14;",

PLOT = "

type = plot3;

series = GA32A GA32H GA32I GA32K GA32L GA33A

GA33H GA33I GA33K GA33L (*);",

rdata = lsay_data

)

lca_enum_fit <- mplusModeler(

lca_enum,

dataout = glue(here("tc_lta","g10_enum", "lsay_g10.dat")),

modelout = glue(here("tc_lta","g10_enum", "c{k}_g10.inp")),

check = TRUE,

run = TRUE,

hashfilename = FALSE

)

})26.4.4 Run LCA for 12th Grade

lca_belonging <- lapply(1:8, function(k) {

lca_enum <- mplusObject(

TITLE = glue("{k}-Class LCA for LCA 12th Grade"),

VARIABLE = glue(

"categorical = KA46A KA46H KA46I KA46K KA46L KA47A KA47H KA47I KA47K KA47L;

usevar = KA46A KA46H KA46I KA46K KA46L KA47A KA47H KA47I KA47K KA47L;

missing = all(9999);

classes = c({k});"

),

ANALYSIS = "

estimator = mlr;

type = mixture;

starts = 500 10;

processors = 10;",

OUTPUT = "sampstat; residual; tech11; tech14;",

PLOT = "

type = plot3;

series = KA46A KA46H KA46I KA46K KA46L KA47A

KA47H KA47I KA47K KA47L (*);",

rdata = lsay_data

)

lca_enum_fit <- mplusModeler(

lca_enum,

dataout = glue(here("tc_lta","g12_enum", "lsay_g12.dat")),

modelout = glue(here("tc_lta","g12_enum", "c{k}_g12.inp")),

check = TRUE,

run = TRUE,

hashfilename = FALSE

)

})Extract Mplus Information

# LCA Extraction

source(here("tc_lta","functions", "extract_mplus_info.R"))

output_dir_lca <- here("tc_lta","enum")

output_files_lca <- list.files(output_dir_lca, pattern = "\\.out$", full.names = TRUE)

final_data_lca <- map_dfr(output_files_lca, extract_mplus_info_extended) %>%

mutate(Model_Type = "LCA")26.5 Screen Output for Warnings, Errors, and Loglikelihood Replication

After estimating each LCA model, we examine the Mplus output files for warnings, estimation errors, and loglikelihood replication issues. This quality check helps ensure that solutions are trustworthy and that selected models are not based on local maxima or convergence failures. In this step, we flag any estimation concerns and verify that the best loglikelihood value is replicated consistently across random starts.

Extract Warnings from Output Files

source(here("tc_lta","functions", "extract_mplus_info.R"))

# Extract 7th grade LCAs

output_dir_g7 <- here("tc_lta","g7_enum")

output_files_g7 <- list.files(output_dir_g7, pattern = "\\.out$", full.names = TRUE)

final_data_g7 <- map_dfr(output_files_g7, extract_mplus_info_extended) %>%

mutate(Model_Type = "LCA", Grade = "7th")

# Extract 10th grade LCAs

output_dir_g10 <- here("tc_lta","g10_enum")

output_files_g10 <- list.files(output_dir_g10, pattern = "\\.out$", full.names = TRUE)

final_data_g10 <- map_dfr(output_files_g10, extract_mplus_info_extended) %>%

mutate(Model_Type = "LCA", Grade = "10th")

# Extract 12th grade LCAs

output_dir_g12 <- here("tc_lta","g12_enum")

output_files_g12 <- list.files(output_dir_g12, pattern = "\\.out$", full.names = TRUE)

final_data_g12 <- map_dfr(output_files_g12, extract_mplus_info_extended) %>%

mutate(Model_Type = "LCA", Grade = "12th")26.5.1 Examine Output Warnings

source(here("tc_lta","functions", "extract_warnings.R"))

# ---- 7th Grade ----

warnings_g7 <- extract_warnings(final_data_g7) %>%

left_join(select(final_data_g7, File_Name), by = "File_Name")

warnings_table_g7 <- warnings_g7 %>%

gt() %>%

tab_header(title = md("**Model Warnings — 7th Grade LCA**")) %>%

cols_label(

File_Name = "Output File",

Warning_Summary = "# of Warnings",

Warnings = "Warning Message(s)"

) %>%

cols_align(align = "left", columns = everything()) %>%

cols_width(

File_Name ~ px(150),

Warning_Summary ~ px(150),

Warnings ~ px(400)

) %>%

tab_options(table.width = pct(100))

# ---- 10th Grade ----

warnings_g10 <- extract_warnings(final_data_g10) %>%

left_join(select(final_data_g10, File_Name), by = "File_Name")

warnings_table_g10 <- warnings_g10 %>%

gt() %>%

tab_header(title = md("**Model Warnings — 10th Grade LCA**")) %>%

cols_label(

File_Name = "Output File",

Warning_Summary = "# of Warnings",

Warnings = "Warning Message(s)"

) %>%

cols_align(align = "left", columns = everything()) %>%

cols_width(

File_Name ~ px(150),

Warning_Summary ~ px(150),

Warnings ~ px(400)

) %>%

tab_options(table.width = pct(100))

# ---- 12th Grade ----

warnings_g12 <- extract_warnings(final_data_g12) %>%

left_join(select(final_data_g12, File_Name), by = "File_Name")

warnings_table_g12 <- warnings_g12 %>%

gt() %>%

tab_header(title = md("**Model Warnings — 12th Grade LCA**")) %>%

cols_label(

File_Name = "Output File",

Warning_Summary = "# of Warnings",

Warnings = "Warning Message(s)"

) %>%

cols_align(align = "left", columns = everything()) %>%

cols_width(

File_Name ~ px(150),

Warning_Summary ~ px(150),

Warnings ~ px(400)

) %>%

tab_options(table.width = pct(100))

# Print all three

warnings_table_g7| Model Warnings — 7th Grade LCA | ||

| Output File | # of Warnings | Warning Message(s) |

|---|---|---|

| c1_g7.out | There are 4 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING in OUTPUT command TECH11 option is not available for TYPE=MIXTURE with only one class. Request for TECH11 is ignored. | ||

| *** WARNING in OUTPUT command TECH14 option is not available for TYPE=MIXTURE with only one class. Request for TECH14 is ignored. | ||

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 26 | ||

| c2_g7.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 26 | ||

| c3_g7.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 26 | ||

| c4_g7.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 26 | ||

| c5_g7.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 26 | ||

| c6_g7.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 26 | ||

| c7_g7.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 26 | ||

| c8_g7.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 26 | ||

warnings_table_g10| Model Warnings — 10th Grade LCA | ||

| Output File | # of Warnings | Warning Message(s) |

|---|---|---|

| c1_g10.out | There are 4 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING in OUTPUT command TECH11 option is not available for TYPE=MIXTURE with only one class. Request for TECH11 is ignored. | ||

| *** WARNING in OUTPUT command TECH14 option is not available for TYPE=MIXTURE with only one class. Request for TECH14 is ignored. | ||

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 378 | ||

| c2_g10.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 378 | ||

| c3_g10.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 378 | ||

| c4_g10.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 378 | ||

| c5_g10.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 378 | ||

| c6_g10.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 378 | ||

| c7_g10.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 378 | ||

| c8_g10.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 378 | ||

warnings_table_g12| Model Warnings — 12th Grade LCA | ||

| Output File | # of Warnings | Warning Message(s) |

|---|---|---|

| c1_g12.out | There are 4 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING in OUTPUT command TECH11 option is not available for TYPE=MIXTURE with only one class. Request for TECH11 is ignored. | ||

| *** WARNING in OUTPUT command TECH14 option is not available for TYPE=MIXTURE with only one class. Request for TECH14 is ignored. | ||

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 790 | ||

| c2_g12.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 790 | ||

| c3_g12.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 790 | ||

| c4_g12.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 790 | ||

| c5_g12.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 790 | ||

| c6_g12.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 790 | ||

| c7_g12.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 790 | ||

| c8_g12.out | There are 2 warnings in the output file. | *** WARNING in OUTPUT command SAMPSTAT option is not available when all outcomes are censored, ordered categorical, unordered categorical (nominal), count or continuous-time survival variables. Request for SAMPSTAT is ignored. |

| *** WARNING Data set contains cases with missing on all variables. These cases were not included in the analysis. Number of cases with missing on all variables: 790 | ||

Save Warning Tables

gtsave(warnings_table_g7, filename = here("tc_lta","figures", "warnings_g7_lca.png"))

gtsave(warnings_table_g10, filename = here("tc_lta","figures", "warnings_g10_lca.png"))

gtsave(warnings_table_g12, filename = here("tc_lta","figures", "warnings_g12_lca.png"))Extract Errors from Output Files

source(here("tc_lta","functions", "error_visualization.R"))

# ---- 7th Grade Errors ----

error_table_g7 <- process_error_data(final_data_g7)

# ---- 10th Grade Errors ----

error_table_g10 <- process_error_data(final_data_g10)

# ---- 12th Grade Errors ----

error_table_g12 <- process_error_data(final_data_g12)26.5.2 Examine Errors

# Helper to conditionally render or notify

render_error_table <- function(error_df, grade_label) {

if (nrow(error_df) > 0) {

error_df %>%

gt() %>%

tab_header(title = md(glue("**Model Estimation Errors — {grade_label} Grade**"))) %>%

cols_label(

File_Name = "Output File",

Class_Model = "Model Type",

Error_Message = "Error Message"

) %>%

cols_align(align = "left", columns = everything()) %>%

cols_width(

File_Name ~ px(150),

Class_Model ~ px(100),

Error_Message ~ px(400)

) %>%

tab_options(table.width = px(600)) %>%

fmt(

columns = "Error_Message",

fns = function(x) gsub("\n", "<br>", x)

)

} else {

cat(glue("✅ No errors detected for {grade_label} Grade.\n"))

}

}

# Print or notify for each grade

render_error_table(error_table_g7, "7th")| Model Estimation Errors — 7th Grade | ||

| Output File | Model Type | Error Message |

|---|---|---|

| c2_g7.out | 2-Class | RANDOM STARTS TO CHECK THAT THE BEST LOGLIKELIHOOD IS STILL OBTAINED AND REPLICATED. |

| c3_g7.out | 3-Class | RANDOM STARTS TO CHECK THAT THE BEST LOGLIKELIHOOD IS STILL OBTAINED AND REPLICATED. |

| c4_g7.out | 4-Class | RANDOM STARTS TO CHECK THAT THE BEST LOGLIKELIHOOD IS STILL OBTAINED AND REPLICATED. IN THE OPTIMIZATION, ONE OR MORE LOGIT THRESHOLDS APPROACHED EXTREME VALUES OF -15.000 AND 15.000 AND WERE FIXED TO STABILIZE MODEL ESTIMATION. THESE VALUES IMPLY PROBABILITIES OF 0 AND 1. IN THE MODEL RESULTS SECTION, THESE PARAMETERS HAVE 0 STANDARD ERRORS AND 999 IN THE Z-SCORE AND P-VALUE COLUMNS. |

| c5_g7.out | 5-Class | RANDOM STARTS TO CHECK THAT THE BEST LOGLIKELIHOOD IS STILL OBTAINED AND REPLICATED. IN THE OPTIMIZATION, ONE OR MORE LOGIT THRESHOLDS APPROACHED EXTREME VALUES OF -15.000 AND 15.000 AND WERE FIXED TO STABILIZE MODEL ESTIMATION. THESE VALUES IMPLY PROBABILITIES OF 0 AND 1. IN THE MODEL RESULTS SECTION, THESE PARAMETERS HAVE 0 STANDARD ERRORS AND 999 IN THE Z-SCORE AND P-VALUE COLUMNS. |

| c6_g7.out | 6-Class | RANDOM STARTS TO CHECK THAT THE BEST LOGLIKELIHOOD IS STILL OBTAINED AND REPLICATED. IN THE OPTIMIZATION, ONE OR MORE LOGIT THRESHOLDS APPROACHED EXTREME VALUES OF -15.000 AND 15.000 AND WERE FIXED TO STABILIZE MODEL ESTIMATION. THESE VALUES IMPLY PROBABILITIES OF 0 AND 1. IN THE MODEL RESULTS SECTION, THESE PARAMETERS HAVE 0 STANDARD ERRORS AND 999 IN THE Z-SCORE AND P-VALUE COLUMNS. |

| c7_g7.out | 7-Class | RANDOM STARTS TO CHECK THAT THE BEST LOGLIKELIHOOD IS STILL OBTAINED AND REPLICATED. IN THE OPTIMIZATION, ONE OR MORE LOGIT THRESHOLDS APPROACHED EXTREME VALUES OF -15.000 AND 15.000 AND WERE FIXED TO STABILIZE MODEL ESTIMATION. THESE VALUES IMPLY PROBABILITIES OF 0 AND 1. IN THE MODEL RESULTS SECTION, THESE PARAMETERS HAVE 0 STANDARD ERRORS AND 999 IN THE Z-SCORE AND P-VALUE COLUMNS. |

| c8_g7.out | 8-Class | SOLUTION MAY NOT BE TRUSTWORTHY DUE TO LOCAL MAXIMA. INCREASE THE NUMBER OF RANDOM STARTS. IN THE OPTIMIZATION, ONE OR MORE LOGIT THRESHOLDS APPROACHED EXTREME VALUES OF -15.000 AND 15.000 AND WERE FIXED TO STABILIZE MODEL ESTIMATION. THESE VALUES IMPLY PROBABILITIES OF 0 AND 1. IN THE MODEL RESULTS SECTION, THESE PARAMETERS HAVE 0 STANDARD ERRORS AND 999 IN THE Z-SCORE AND P-VALUE COLUMNS. |

render_error_table(error_table_g10, "10th")| Model Estimation Errors — 10th Grade | ||

| Output File | Model Type | Error Message |

|---|---|---|

| c2_g10.out | 2-Class | RANDOM STARTS TO CHECK THAT THE BEST LOGLIKELIHOOD IS STILL OBTAINED AND REPLICATED. |

| c3_g10.out | 3-Class | RANDOM STARTS TO CHECK THAT THE BEST LOGLIKELIHOOD IS STILL OBTAINED AND REPLICATED. |

| c4_g10.out | 4-Class | RANDOM STARTS TO CHECK THAT THE BEST LOGLIKELIHOOD IS STILL OBTAINED AND REPLICATED. |

| c5_g10.out | 5-Class | RANDOM STARTS TO CHECK THAT THE BEST LOGLIKELIHOOD IS STILL OBTAINED AND REPLICATED. IN THE OPTIMIZATION, ONE OR MORE LOGIT THRESHOLDS APPROACHED EXTREME VALUES OF -15.000 AND 15.000 AND WERE FIXED TO STABILIZE MODEL ESTIMATION. THESE VALUES IMPLY PROBABILITIES OF 0 AND 1. IN THE MODEL RESULTS SECTION, THESE PARAMETERS HAVE 0 STANDARD ERRORS AND 999 IN THE Z-SCORE AND P-VALUE COLUMNS. |

| c6_g10.out | 6-Class | RANDOM STARTS TO CHECK THAT THE BEST LOGLIKELIHOOD IS STILL OBTAINED AND REPLICATED. IN THE OPTIMIZATION, ONE OR MORE LOGIT THRESHOLDS APPROACHED EXTREME VALUES OF -15.000 AND 15.000 AND WERE FIXED TO STABILIZE MODEL ESTIMATION. THESE VALUES IMPLY PROBABILITIES OF 0 AND 1. IN THE MODEL RESULTS SECTION, THESE PARAMETERS HAVE 0 STANDARD ERRORS AND 999 IN THE Z-SCORE AND P-VALUE COLUMNS. |

| c7_g10.out | 7-Class | SOLUTION MAY NOT BE TRUSTWORTHY DUE TO LOCAL MAXIMA. INCREASE THE NUMBER OF RANDOM STARTS. IN THE OPTIMIZATION, ONE OR MORE LOGIT THRESHOLDS APPROACHED EXTREME VALUES OF -15.000 AND 15.000 AND WERE FIXED TO STABILIZE MODEL ESTIMATION. THESE VALUES IMPLY PROBABILITIES OF 0 AND 1. IN THE MODEL RESULTS SECTION, THESE PARAMETERS HAVE 0 STANDARD ERRORS AND 999 IN THE Z-SCORE AND P-VALUE COLUMNS. |

| c8_g10.out | 8-Class | RANDOM STARTS TO CHECK THAT THE BEST LOGLIKELIHOOD IS STILL OBTAINED AND REPLICATED. IN THE OPTIMIZATION, ONE OR MORE LOGIT THRESHOLDS APPROACHED EXTREME VALUES OF -15.000 AND 15.000 AND WERE FIXED TO STABILIZE MODEL ESTIMATION. THESE VALUES IMPLY PROBABILITIES OF 0 AND 1. IN THE MODEL RESULTS SECTION, THESE PARAMETERS HAVE 0 STANDARD ERRORS AND 999 IN THE Z-SCORE AND P-VALUE COLUMNS. THE STANDARD ERRORS OF THE MODEL PARAMETER ESTIMATES MAY NOT BE TRUSTWORTHY FOR SOME PARAMETERS DUE TO A NON-POSITIVE DEFINITE FIRST-ORDER DERIVATIVE PRODUCT MATRIX. THIS MAY BE DUE TO THE STARTING VALUES BUT MAY ALSO BE AN INDICATION OF MODEL NONIDENTIFICATION. THE CONDITION NUMBER IS 0.526D-11. PROBLEM INVOLVING THE FOLLOWING PARAMETER: Parameter 28, %C#3%: [ GA33I$1 ] |

render_error_table(error_table_g12, "12th")| Model Estimation Errors — 12th Grade | ||

| Output File | Model Type | Error Message |

|---|---|---|

| c2_g12.out | 2-Class | RANDOM STARTS TO CHECK THAT THE BEST LOGLIKELIHOOD IS STILL OBTAINED AND REPLICATED. |

| c3_g12.out | 3-Class | RANDOM STARTS TO CHECK THAT THE BEST LOGLIKELIHOOD IS STILL OBTAINED AND REPLICATED. |

| c4_g12.out | 4-Class | RANDOM STARTS TO CHECK THAT THE BEST LOGLIKELIHOOD IS STILL OBTAINED AND REPLICATED. |

| c5_g12.out | 5-Class | RANDOM STARTS TO CHECK THAT THE BEST LOGLIKELIHOOD IS STILL OBTAINED AND REPLICATED. IN THE OPTIMIZATION, ONE OR MORE LOGIT THRESHOLDS APPROACHED EXTREME VALUES OF -15.000 AND 15.000 AND WERE FIXED TO STABILIZE MODEL ESTIMATION. THESE VALUES IMPLY PROBABILITIES OF 0 AND 1. IN THE MODEL RESULTS SECTION, THESE PARAMETERS HAVE 0 STANDARD ERRORS AND 999 IN THE Z-SCORE AND P-VALUE COLUMNS. |

| c6_g12.out | 6-Class | RANDOM STARTS TO CHECK THAT THE BEST LOGLIKELIHOOD IS STILL OBTAINED AND REPLICATED. IN THE OPTIMIZATION, ONE OR MORE LOGIT THRESHOLDS APPROACHED EXTREME VALUES OF -15.000 AND 15.000 AND WERE FIXED TO STABILIZE MODEL ESTIMATION. THESE VALUES IMPLY PROBABILITIES OF 0 AND 1. IN THE MODEL RESULTS SECTION, THESE PARAMETERS HAVE 0 STANDARD ERRORS AND 999 IN THE Z-SCORE AND P-VALUE COLUMNS. THE STANDARD ERRORS OF THE MODEL PARAMETER ESTIMATES MAY NOT BE TRUSTWORTHY FOR SOME PARAMETERS DUE TO A NON-POSITIVE DEFINITE FIRST-ORDER DERIVATIVE PRODUCT MATRIX. THIS MAY BE DUE TO THE STARTING VALUES BUT MAY ALSO BE AN INDICATION OF MODEL NONIDENTIFICATION. THE CONDITION NUMBER IS 0.212D-11. PROBLEM INVOLVING THE FOLLOWING PARAMETER: Parameter 39, %C#4%: [ KA47K$1 ] |

| c7_g12.out | 7-Class | SOLUTION MAY NOT BE TRUSTWORTHY DUE TO LOCAL MAXIMA. INCREASE THE NUMBER OF RANDOM STARTS. IN THE OPTIMIZATION, ONE OR MORE LOGIT THRESHOLDS APPROACHED EXTREME VALUES OF -15.000 AND 15.000 AND WERE FIXED TO STABILIZE MODEL ESTIMATION. THESE VALUES IMPLY PROBABILITIES OF 0 AND 1. IN THE MODEL RESULTS SECTION, THESE PARAMETERS HAVE 0 STANDARD ERRORS AND 999 IN THE Z-SCORE AND P-VALUE COLUMNS. THE STANDARD ERRORS OF THE MODEL PARAMETER ESTIMATES MAY NOT BE TRUSTWORTHY FOR SOME PARAMETERS DUE TO A NON-POSITIVE DEFINITE FIRST-ORDER DERIVATIVE PRODUCT MATRIX. THIS MAY BE DUE TO THE STARTING VALUES BUT MAY ALSO BE AN INDICATION OF MODEL NONIDENTIFICATION. THE CONDITION NUMBER IS 0.120D-13. PROBLEM INVOLVING THE FOLLOWING PARAMETER: Parameter 16, %C#2%: [ KA47A$1 ] |

| c8_g12.out | 8-Class | SOLUTION MAY NOT BE TRUSTWORTHY DUE TO LOCAL MAXIMA. INCREASE THE NUMBER OF RANDOM STARTS. IN THE OPTIMIZATION, ONE OR MORE LOGIT THRESHOLDS APPROACHED EXTREME VALUES OF -15.000 AND 15.000 AND WERE FIXED TO STABILIZE MODEL ESTIMATION. THESE VALUES IMPLY PROBABILITIES OF 0 AND 1. IN THE MODEL RESULTS SECTION, THESE PARAMETERS HAVE 0 STANDARD ERRORS AND 999 IN THE Z-SCORE AND P-VALUE COLUMNS. |

Save Error Tables

if (exists("error_table_g7") && nrow(error_table_g7) > 0) {

gtsave(render_error_table(error_table_g7, "7th"), here("tc_lta","figures", "errors_g7_lca.png"))

}

if (exists("error_table_g10") && nrow(error_table_g10) > 0) {

gtsave(render_error_table(error_table_g10, "10th"), here("tc_lta","figures", "errors_g10_lca.png"))

}

if (exists("error_table_g12") && nrow(error_table_g12) > 0) {

gtsave(render_error_table(error_table_g12, "12th"), here("tc_lta","figures", "errors_g12_lca.png"))

}26.5.3 Examine Convergence Information

# Load function

source(here("tc_lta","functions", "summary_table.R"))

# Helper: clean + prep for each dataset

prepare_convergence_table <- function(data_object, grade_label) {

sample_size <- data_object$Sample_Size[1]

data_flat <- data_object %>%

select(-LogLikelihoods, -Errors, -Warnings) %>%

mutate(across(

c(

Best_LogLikelihood,

Perc_Convergence,

Replicated_LL_Perc,

Smallest_Class_Perc,

Condition_Number

),

~ as.numeric(gsub(",", "", .))

))

tbl <- create_flextable(data_flat, sample_size)

# Get actual number of columns in the flextable object

n_cols <- length(tbl$body$col_keys)

# Title string

title_text <- glue("LCA Convergence Table — {grade_label} Grade (N = {sample_size})")

# This is the correct call: title as one string, colwidth = full span

tbl <- add_header_row(

tbl,

values = title_text,

colwidths = n_cols

) %>%

align(i = 1, align = "center", part = "header") %>%

fontsize(i = 1, size = 12, part = "header") %>%

bg(i = 1, bg = "#ffffff", part = "header")

return(tbl)

}

# Create tables

summary_table_g7 <- prepare_convergence_table(final_data_g7, "7th")

summary_table_g10 <- prepare_convergence_table(final_data_g10, "10th")

summary_table_g12 <- prepare_convergence_table(final_data_g12, "12th")

summary_table_g7 LCA Convergence Table — 7th Grade (N = 1886) | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|

N = 1886 |

Random Starts |

Final starts converging |

LL Replication |

Smallest Class |

||||||

Model |

Best LL |

npar |

Initial |

Final |

𝒇 |

% |

𝒇 |

% |

𝒇 |

% |

1-Class |

-11,803.429 |

10 |

500 |

10 |

10 |

100% |

10 |

100% |

1,886 |

100.0% |

2-Class |

-10,418.761 |

21 |

500 |

10 |

10 |

100% |

10 |

100% |

782 |

41.5% |

3-Class |

-10,165.874 |

32 |

500 |

10 |

10 |

100% |

10 |

100% |

384 |

20.4% |

4-Class |

-10,042.970 |

43 |

500 |

10 |

10 |

100% |

10 |

100% |

390 |

20.7% |

5-Class |

-9,969.239 |

54 |

500 |

10 |

10 |

100% |

6 |

60% |

175 |

9.3% |

6-Class |

-9,915.148 |

65 |

500 |

10 |

10 |

100% |

8 |

80% |

180 |

9.5% |

7-Class |

-9,891.204 |

76 |

500 |

10 |

10 |

100% |

5 |

50% |

47 |

2.5% |

8-Class |

-9,871.411 |

87 |

500 |

10 |

10 |

100% |

1 |

10% |

55 |

2.9% |

summary_table_g10LCA Convergence Table — 10th Grade (N = 1534) | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|

N = 1534 |

Random Starts |

Final starts converging |

LL Replication |

Smallest Class |

||||||

Model |

Best LL |

npar |

Initial |

Final |

𝒇 |

% |

𝒇 |

% |

𝒇 |

% |

1-Class |

-10,072.926 |

10 |

500 |

10 |

10 |

100% |

10 |

100% |

1,534 |

100.0% |

2-Class |

-8,428.384 |

21 |

500 |

10 |

10 |

100% |

10 |

100% |

658 |

42.9% |

3-Class |

-8,067.612 |

32 |

500 |

10 |

10 |

100% |

10 |

100% |

297 |

19.4% |

4-Class |

-7,905.535 |

43 |

500 |

10 |

10 |

100% |

10 |

100% |

290 |

18.9% |

5-Class |

-7,845.441 |

54 |

500 |

10 |

10 |

100% |

10 |

100% |

220 |

14.3% |

6-Class |

-7,806.987 |

65 |

500 |

10 |

10 |

100% |

3 |

30% |

130 |

8.5% |

7-Class |

-7,779.821 |

76 |

500 |

10 |

10 |

100% |

1 |

10% |

62 |

4.1% |

8-Class |

-7,754.190 |

87 |

500 |

10 |

10 |

100% |

2 |

20% |

84 |

5.5% |

summary_table_g12LCA Convergence Table — 12th Grade (N = 1122) | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|

N = 1122 |

Random Starts |

Final starts converging |

LL Replication |

Smallest Class |

||||||

Model |

Best LL |

npar |

Initial |

Final |

𝒇 |

% |

𝒇 |

% |

𝒇 |

% |

1-Class |

-7,349.129 |

10 |

500 |

10 |

10 |

100% |

10 |

100% |

1,122 |

100.0% |

2-Class |

-5,976.598 |

21 |

500 |

10 |

10 |

100% |

10 |

100% |

534 |

47.5% |

3-Class |

-5,670.595 |

32 |

500 |

10 |

10 |

100% |

10 |

100% |

203 |

18.1% |

4-Class |

-5,543.616 |

43 |

500 |

10 |

10 |

100% |

10 |

100% |

219 |

19.5% |

5-Class |

-5,483.669 |

54 |

500 |

10 |

10 |

100% |

9 |

90% |

131 |

11.7% |

6-Class |

-5,444.055 |

65 |

500 |

10 |

10 |

100% |

5 |

50% |

81 |

7.2% |

7-Class |

-5,420.551 |

76 |

500 |

10 |

10 |

100% |

1 |

10% |

44 |

3.9% |

8-Class |

-5,395.532 |

87 |

500 |

10 |

10 |

100% |

1 |

10% |

61 |

5.4% |

Save Convergence Tables

# Save convergence tables as PNGs

invisible(save_as_image(summary_table_g7, path = here("tc_lta","figures", "convergence_g7_lca.png")))

invisible(save_as_image(summary_table_g10, path = here("tc_lta","figures", "convergence_g10_lca.png")))

invisible(save_as_image(summary_table_g12, path = here("tc_lta","figures", "convergence_g12_lca.png")))Scrape Replication Data

26.5.4 Examine Loglikelihood Replication Information

# Generate replication plots (invisible, for diagnostic use)

ll_replication_tables_g7 <- generate_ll_replication_plots(final_data_g7)

ll_replication_tables_g10 <- generate_ll_replication_plots(final_data_g10)

ll_replication_tables_g12 <- generate_ll_replication_plots(final_data_g12)

# Create replication tables

ll_replication_table_g7 <- create_ll_replication_table_all(final_data_g7)

ll_replication_table_g10 <- create_ll_replication_table_all(final_data_g10)

ll_replication_table_g12 <- create_ll_replication_table_all(final_data_g12)

# Add visible title row to each table

ll_replication_table_g7 <- add_header_row(

ll_replication_table_g7,

values = "Log-Likelihood Replication Table — 7th Grade LCA",

colwidths = ncol(ll_replication_table_g7$body$dataset)

) %>%

align(i = 1, align = "center", part = "header") %>%

fontsize(i = 1, size = 12, part = "header") %>%

bg(i = 1, bg = "#ffffff", part = "header")

ll_replication_table_g10 <- add_header_row(

ll_replication_table_g10,

values = "Log-Likelihood Replication Table — 10th Grade LCA",

colwidths = ncol(ll_replication_table_g10$body$dataset)

) %>%

align(i = 1, align = "center", part = "header") %>%

fontsize(i = 1, size = 12, part = "header") %>%

bg(i = 1, bg = "#ffffff", part = "header")

ll_replication_table_g12 <- add_header_row(

ll_replication_table_g12,

values = "Log-Likelihood Replication Table — 12th Grade LCA",

colwidths = ncol(ll_replication_table_g12$body$dataset)

) %>%

align(i = 1, align = "center", part = "header") %>%

fontsize(i = 1, size = 12, part = "header") %>%

bg(i = 1, bg = "#ffffff", part = "header")

# Display the three titled tables

ll_replication_table_g7Log-Likelihood Replication Table — 7th Grade LCA | |||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

1-Class |

2-Class |

3-Class |

4-Class |

5-Class |

6-Class |

7-Class |

8-Class |

||||||||||||||||

LL_c1 |

n_c1 |

perc_c1 |

LL_c2 |

n_c2 |

perc_c2 |

LL_c3 |

n_c3 |

perc_c3 |

LL_c4 |

n_c4 |

perc_c4 |

LL_c5 |

n_c5 |

perc_c5 |

LL_c6 |

n_c6 |

perc_c6 |

LL_c7 |

n_c7 |

perc_c7 |

LL_c8 |

n_c8 |

perc_c8 |

-11803.429 |

10 |

100 |

-10418.761 |

10 |

100 |

-10165.874 |

10 |

100 |

-10042.97 |

10 |

100 |

-9969.239 |

6 |

60 |

-9915.148 |

8 |

80 |

-9891.204 |

5 |

50 |

-9,871.411 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-9969.697 |

4 |

40 |

-9915.778 |

1 |

10 |

-9893.864 |

1 |

10 |

-9,872.124 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-9916.262 |

1 |

10 |

-9894.265 |

1 |

10 |

-9,874.200 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-9898.309 |

1 |

10 |

-9,876.173 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-9904.214 |

1 |

10 |

-9,876.914 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-9904.532 |

1 |

10 |

-9,877.583 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-9,877.741 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-9,877.806 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-9,879.960 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-9,888.932 |

1 |

10 |

ll_replication_table_g10Log-Likelihood Replication Table — 10th Grade LCA | |||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

1-Class |

2-Class |

3-Class |

4-Class |

5-Class |

6-Class |

7-Class |

8-Class |

||||||||||||||||

LL_c1 |

n_c1 |

perc_c1 |

LL_c2 |

n_c2 |

perc_c2 |

LL_c3 |

n_c3 |

perc_c3 |

LL_c4 |

n_c4 |

perc_c4 |

LL_c5 |

n_c5 |

perc_c5 |

LL_c6 |

n_c6 |

perc_c6 |

LL_c7 |

n_c7 |

perc_c7 |

LL_c8 |

n_c8 |

perc_c8 |

-10072.926 |

10 |

100 |

-8428.384 |

10 |

100 |

-8067.612 |

10 |

100 |

-7905.535 |

10 |

100 |

-7845.441 |

10 |

100 |

-7806.987 |

3 |

30 |

-7779.821 |

1 |

10 |

-7,754.190 |

2 |

20 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-7807.464 |

4 |

40 |

-7780.664 |

5 |

50 |

-7,754.206 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-7807.485 |

1 |

10 |

-7781.893 |

1 |

10 |

-7,757.915 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-7808.751 |

1 |

10 |

-7783.866 |

1 |

10 |

-7,758.288 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-7820.453 |

1 |

10 |

-7784.195 |

1 |

10 |

-7,759.634 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-7784.933 |

1 |

10 |

-7,759.730 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-7,760.773 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-7,762.451 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-7,762.777 |

1 |

10 |

ll_replication_table_g12Log-Likelihood Replication Table — 12th Grade LCA | |||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

1-Class |

2-Class |

3-Class |

4-Class |

5-Class |

6-Class |

7-Class |

8-Class |

||||||||||||||||

LL_c1 |

n_c1 |

perc_c1 |

LL_c2 |

n_c2 |

perc_c2 |

LL_c3 |

n_c3 |

perc_c3 |

LL_c4 |

n_c4 |

perc_c4 |

LL_c5 |

n_c5 |

perc_c5 |

LL_c6 |

n_c6 |

perc_c6 |

LL_c7 |

n_c7 |

perc_c7 |

LL_c8 |

n_c8 |

perc_c8 |

-7349.129 |

10 |

100 |

-5976.598 |

10 |

100 |

-5670.595 |

10 |

100 |

-5543.616 |

10 |

100 |

-5483.669 |

9 |

90 |

-5444.055 |

5 |

50 |

-5420.551 |

1 |

10 |

-5,395.532 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-5484.101 |

1 |

10 |

-5446.399 |

4 |

40 |

-5421.469 |

1 |

10 |

-5,397.310 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-5446.424 |

1 |

10 |

-5421.554 |

2 |

20 |

-5,397.311 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-5421.845 |

1 |

10 |

-5,398.708 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-5422.176 |

1 |

10 |

-5,399.930 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-5423.003 |

1 |

10 |

-5,400.458 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-5424.777 |

1 |

10 |

-5,400.463 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-5426.79 |

1 |

10 |

-5,401.156 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-5427.065 |

1 |

10 |

-5,403.971 |

1 |

10 |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

— |

-5,405.328 |

1 |

10 |

Save LL Replication Tables

invisible({

flextable::save_as_image(ll_replication_table_g7, path = here::here("tc_lta","figures", "ll_replication_table_g7.png"))

flextable::save_as_image(ll_replication_table_g10, path = here::here("tc_lta","figures", "ll_replication_table_g10.png"))

flextable::save_as_image(ll_replication_table_g12, path = here::here("tc_lta","figures", "ll_replication_table_g12.png"))

})26.6 Examine Model Fit for Optimal Solution

We evaluate model fit for each grade-level LCA to identify the optimal number of latent profiles. Fit statistics such as BIC, entropy, and likelihood ratio tests (LMR and BLRT) are compared across models. The goal is to select the most parsimonious solution that provides clear separation between classes and replicates reliably. These selections will form the basis for the longitudinal model in the next stage.

Extract fit statistics

# Define grade-specific folders and data

grades <- c("g7", "g10", "g12")

folder_paths <- c(

g7 = here("tc_lta","g7_enum"),

g10 = here("tc_lta","g10_enum"),

g12 = here("tc_lta","g12_enum")

)

final_data_list <- list(

g7 = final_data_g7,

g10 = final_data_g10,

g12 = final_data_g12

)

# Initialize storage

output_models_all <- list()

allFit_list <- list()

# Process each grade

for (grade in grades) {

# Get all .out files (1-8 classes)

out_files <- list.files(folder_paths[grade], pattern = "^c[1-8]_g\\d+\\.out$", full.names = TRUE)

# Read Mplus output files

output_models <- list()

for (file in out_files) {

model <- readModels(file, quiet = TRUE)

if (!is.null(model) && length(model) > 0) {

output_models[[basename(file)]] <- model

}

}

# Store models

output_models_all[[grade]] <- output_models

# Extract summary table

model_extract <- LatexSummaryTable(

output_models,

keepCols = c("Title", "Parameters", "LL", "BIC", "aBIC", "T11_VLMR_PValue", "BLRT_PValue", "Observations", "Entropy"),

sortBy = "Title"

)

# Compute additional fit indices

allFit <- model_extract %>%

mutate(

Title = str_trim(Title),

Grade = toupper(grade),

Classes = as.integer(str_extract(Title, "\\d+")), # Extract class number

File_Name = names(output_models),

CAIC = -2 * LL + Parameters * (log(Observations) + 1),

AWE = -2 * LL + 2 * Parameters * (log(Observations) + 1.5),

SIC = -0.5 * BIC,

expSIC = exp(SIC - max(SIC, na.rm = TRUE)),

BF = if_else(is.na(lead(SIC)), NA_real_, exp(SIC - lead(SIC))),

cmPk = expSIC / sum(expSIC, na.rm = TRUE)

) %>%

arrange(Classes)

# Clean Class_Model for joining

final_data_clean <- final_data_list[[grade]] %>%

mutate(

Classes = as.integer(str_extract(Class_Model, "\\d+"))

) %>%

select(Class_Model, Classes, Perc_Convergence, Replicated_LL_Perc, Smallest_Class, Smallest_Class_Perc)

# Merge with final_data

allFit <- allFit %>%

left_join(

final_data_clean,

by = "Classes"

) %>%

mutate(

Smallest_Class_Combined = paste0(Smallest_Class, "\u00A0(", Smallest_Class_Perc, "%)")

) %>%

relocate(Grade, Classes, Parameters, LL, Perc_Convergence, Replicated_LL_Perc, .before = BIC) %>%

select(

Grade, Classes, Parameters, LL, Perc_Convergence, Replicated_LL_Perc,

BIC, aBIC, CAIC, AWE, T11_VLMR_PValue, BLRT_PValue, Entropy, Smallest_Class_Combined, BF, cmPk

)

# Store fit data

allFit_list[[grade]] <- allFit

}

# Combine fit data

allFit_combined <- bind_rows(allFit_list) %>%

arrange(Grade, Classes)26.6.1 Examine Fit Statistics

# Initialize list to store tables

fit_tables <- list()

# Create and render table for each grade

for (grade in grades) {

# Filter data for current grade

allFit_grade <- allFit_combined %>%

filter(Grade == toupper(grade)) %>%

select(-Perc_Convergence, -Replicated_LL_Perc) # Exclude convergence columns

# Create table

fit_table <- allFit_grade %>%

gt() %>%

tab_header(title = md(sprintf("**Model Fit Summary Table for %s Grade**", toupper(grade)))) %>%

tab_spanner(label = "Model Fit Indices", columns = c(BIC, aBIC, CAIC, AWE)) %>%

tab_spanner(label = "LRTs", columns = c(T11_VLMR_PValue, BLRT_PValue)) %>%

tab_spanner(label = md("Smallest\u00A0Class"), columns = c(Smallest_Class_Combined)) %>%

cols_label(

Grade = "Grade",

Classes = "Classes",

Parameters = md("npar"),

LL = md("*LL*"),

# Perc_Convergence = "% Converged", # Commented out

# Replicated_LL_Perc = "% Replicated", # Commented out

BIC = "BIC",

aBIC = "aBIC",

CAIC = "CAIC",

AWE = "AWE",

T11_VLMR_PValue = "VLMR",

BLRT_PValue = "BLRT",

Entropy = "Entropy",

Smallest_Class_Combined = "n (%)",

BF = "BF",

cmPk = "cmPk"

) %>%

tab_footnote(

footnote = md(

"*Note.* npar = Parameters; *LL* = model log likelihood;

BIC = Bayesian information criterion;

aBIC = sample size adjusted BIC; CAIC = consistent Akaike information criterion;

AWE = approximate weight of evidence criterion;

BLRT = bootstrapped likelihood ratio test p-value;

VLMR = Vuong-Lo-Mendell-Rubin adjusted likelihood ratio test p-value;

Smallest n (%) = Number of cases in the smallest class."

),

locations = cells_title()

) %>%

tab_options(column_labels.font.weight = "bold") %>%

fmt_number(

columns = c(LL, BIC, aBIC, CAIC, AWE, Entropy, BF, cmPk),

decimals = 2

) %>%

fmt(

columns = c(T11_VLMR_PValue, BLRT_PValue),

fns = function(x) ifelse(is.na(x), "—", ifelse(x < 0.001, "<.001", scales::number(x, accuracy = .01)))

) %>%

cols_align(align = "center", columns = everything()) %>%

tab_style(

style = list(cell_text(weight = "bold")),

locations = list(

cells_body(columns = BIC, rows = BIC == min(BIC)),

cells_body(columns = aBIC, rows = aBIC == min(aBIC)),

cells_body(columns = CAIC, rows = CAIC == min(CAIC)),

cells_body(columns = AWE, rows = AWE == min(AWE)),

cells_body(

columns = T11_VLMR_PValue,

rows = T11_VLMR_PValue < .05 & lead(T11_VLMR_PValue, default = 1) > .05

),

cells_body(

columns = BLRT_PValue,

rows = BLRT_PValue < .05 & lead(BLRT_PValue, default = 1) > .05

)

)

)

# Store table

fit_tables[[grade]] <- fit_table

# Render table without markdown header

# cat(sprintf("\n### LCA Fit Table for %s Grade\n", toupper(grade))) # Commented out to avoid markdown headers

print(fit_table)

}| Model Fit Summary Table for G7 Grade1 | |||||||||||||

| Grade | Classes | npar | LL |

Model Fit Indices

|

LRTs

|

Entropy |

Smallest Class

|

BF | cmPk | ||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| BIC | aBIC | CAIC | AWE | VLMR | BLRT | n (%) | |||||||

| G7 | 1 | 10 | −11,803.43 | 23,682.28 | 23,650.51 | 23,692.28 | 23,787.70 | — | — | NA | 1886 (100%) | 0.00 | 0.00 |

| G7 | 2 | 21 | −10,418.76 | 20,995.91 | 20,929.19 | 21,016.91 | 21,217.29 | <.001 | <.001 | 0.81 | 782 (41.5%) | 0.00 | 0.00 |

| G7 | 3 | 32 | −10,165.87 | 20,573.10 | 20,471.44 | 20,605.10 | 20,910.45 | 0.00 | <.001 | 0.75 | 384 (20.4%) | 0.00 | 0.00 |

| G7 | 4 | 43 | −10,042.97 | 20,410.26 | 20,273.64 | 20,453.26 | 20,863.57 | 0.00 | <.001 | 0.70 | 390 (20.7%) | 0.00 | 0.00 |

| G7 | 5 | 54 | −9,969.24 | 20,345.76 | 20,174.20 | 20,399.76 | 20,915.04 | 0.04 | <.001 | 0.76 | 175 (9.3%) | 0.00 | 0.00 |

| G7 | 6 | 65 | −9,915.15 | 20,320.54 | 20,114.04 | 20,385.54 | 21,005.78 | 0.01 | <.001 | 0.77 | 180 (9.5%) | 41,346,568.94 | 1.00 |

| G7 | 7 | 76 | −9,891.20 | 20,355.62 | 20,114.16 | 20,431.62 | 21,156.82 | 0.08 | <.001 | 0.77 | 47 (2.5%) | 2,628,029,072.23 | 0.00 |

| G7 | 8 | 87 | −9,871.41 | 20,398.99 | 20,122.60 | 20,485.99 | 21,316.17 | 0.36 | <.001 | 0.75 | 55 (2.9%) | NA | 0.00 |

| 1 Note. npar = Parameters; LL = model log likelihood; BIC = Bayesian information criterion; aBIC = sample size adjusted BIC; CAIC = consistent Akaike information criterion; AWE = approximate weight of evidence criterion; BLRT = bootstrapped likelihood ratio test p-value; VLMR = Vuong-Lo-Mendell-Rubin adjusted likelihood ratio test p-value; Smallest n (%) = Number of cases in the smallest class. | |||||||||||||

| Model Fit Summary Table for G10 Grade1 | |||||||||||||

| Grade | Classes | npar | LL |

Model Fit Indices

|

LRTs

|

Entropy |

Smallest Class

|

BF | cmPk | ||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| BIC | aBIC | CAIC | AWE | VLMR | BLRT | n (%) | |||||||

| G10 | 1 | 10 | −10,072.93 | 20,219.21 | 20,187.44 | 20,229.21 | 20,322.56 | — | — | NA | 1534 (100%) | 0.00 | 0.00 |

| G10 | 2 | 21 | −8,428.38 | 17,010.82 | 16,944.10 | 17,031.82 | 17,227.86 | <.001 | <.001 | 0.86 | 658 (42.9%) | 0.00 | 0.00 |

| G10 | 3 | 32 | −8,067.61 | 16,369.96 | 16,268.31 | 16,401.96 | 16,700.70 | <.001 | <.001 | 0.83 | 297 (19.4%) | 0.00 | 0.00 |

| G10 | 4 | 43 | −7,905.53 | 16,126.50 | 15,989.90 | 16,169.50 | 16,570.93 | <.001 | <.001 | 0.79 | 290 (18.9%) | 0.00 | 0.00 |

| G10 | 5 | 54 | −7,845.44 | 16,087.01 | 15,915.46 | 16,141.01 | 16,645.13 | 0.01 | <.001 | 0.78 | 220 (14.3%) | 6.63 | 0.87 |

| G10 | 6 | 65 | −7,806.99 | 16,090.79 | 15,884.30 | 16,155.79 | 16,762.61 | 0.02 | <.001 | 0.80 | 130 (8.5%) | 529,929.89 | 0.13 |

| G10 | 7 | 76 | −7,779.82 | 16,117.15 | 15,875.72 | 16,193.15 | 16,902.66 | 0.53 | <.001 | 0.85 | 62 (4.1%) | 2,455,890.54 | 0.00 |

| G10 | 8 | 87 | −7,754.19 | 16,146.58 | 15,870.20 | 16,233.58 | 17,045.78 | 0.32 | <.001 | 0.80 | 84 (5.5%) | NA | 0.00 |

| 1 Note. npar = Parameters; LL = model log likelihood; BIC = Bayesian information criterion; aBIC = sample size adjusted BIC; CAIC = consistent Akaike information criterion; AWE = approximate weight of evidence criterion; BLRT = bootstrapped likelihood ratio test p-value; VLMR = Vuong-Lo-Mendell-Rubin adjusted likelihood ratio test p-value; Smallest n (%) = Number of cases in the smallest class. | |||||||||||||

| Model Fit Summary Table for G12 Grade1 | |||||||||||||

| Grade | Classes | npar | LL |

Model Fit Indices

|

LRTs

|

Entropy |

Smallest Class

|

BF | cmPk | ||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| BIC | aBIC | CAIC | AWE | VLMR | BLRT | n (%) | |||||||

| G12 | 1 | 10 | −7,349.13 | 14,768.49 | 14,736.72 | 14,778.49 | 14,868.72 | — | — | NA | 1122 (100%) | 0.00 | 0.00 |

| G12 | 2 | 21 | −5,976.60 | 12,100.68 | 12,033.98 | 12,121.68 | 12,311.16 | <.001 | <.001 | 0.86 | 534 (47.5%) | 0.00 | 0.00 |

| G12 | 3 | 32 | −5,670.60 | 11,565.92 | 11,464.28 | 11,597.92 | 11,886.65 | <.001 | <.001 | 0.85 | 203 (18.1%) | 0.00 | 0.00 |

| G12 | 4 | 43 | −5,543.62 | 11,389.22 | 11,252.64 | 11,432.22 | 11,820.20 | 0.00 | <.001 | 0.80 | 219 (19.5%) | 0.00 | 0.00 |

| G12 | 5 | 54 | −5,483.67 | 11,346.57 | 11,175.06 | 11,400.57 | 11,887.81 | 0.00 | <.001 | 0.84 | 131 (11.7%) | 0.37 | 0.27 |

| G12 | 6 | 65 | −5,444.06 | 11,344.60 | 11,138.14 | 11,409.60 | 11,996.08 | 0.09 | <.001 | 0.84 | 81 (7.2%) | 3,693,185.79 | 0.73 |

| G12 | 7 | 76 | −5,420.55 | 11,374.84 | 11,133.44 | 11,450.84 | 12,136.58 | 0.03 | <.001 | 0.85 | 44 (3.9%) | 810,981.08 | 0.00 |

| G12 | 8 | 87 | −5,395.53 | 11,402.05 | 11,125.72 | 11,489.05 | 12,274.04 | 0.10 | <.001 | 0.84 | 61 (5.4%) | NA | 0.00 |

| 1 Note. npar = Parameters; LL = model log likelihood; BIC = Bayesian information criterion; aBIC = sample size adjusted BIC; CAIC = consistent Akaike information criterion; AWE = approximate weight of evidence criterion; BLRT = bootstrapped likelihood ratio test p-value; VLMR = Vuong-Lo-Mendell-Rubin adjusted likelihood ratio test p-value; Smallest n (%) = Number of cases in the smallest class. | |||||||||||||

Save Fit Tables

# Save tables as PNG files

gtsave(fit_tables[["g7"]], filename = here("tc_lta","figures", "fit_table_lca_g7.png"))

gtsave(fit_tables[["g10"]], filename = here("tc_lta","figures", "fit_table_lca_g10.png"))

gtsave(fit_tables[["g12"]], filename = here("tc_lta","figures", "fit_table_lca_g12.png"))26.7 Create and Examine Probability Plots for Four Class Solution

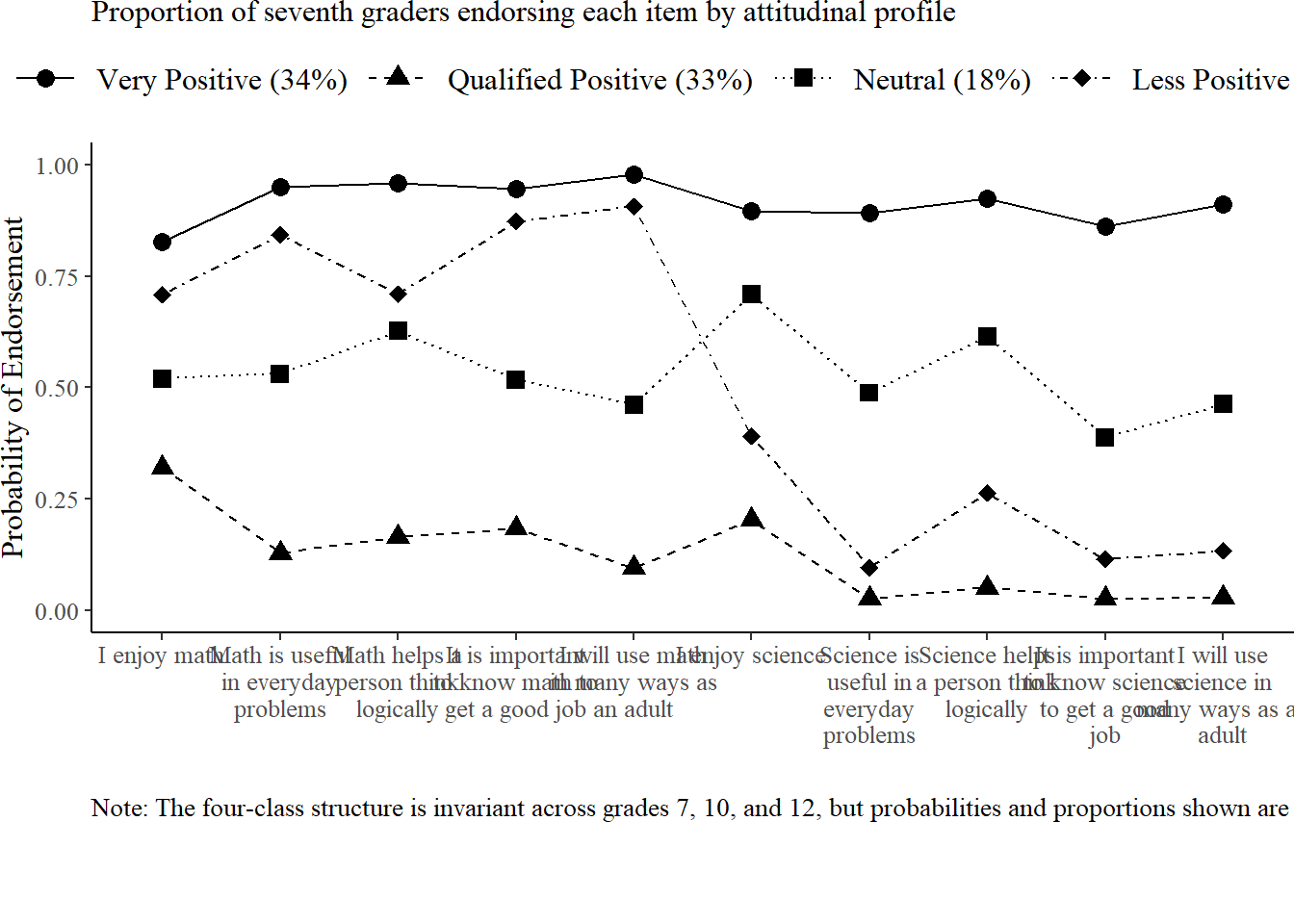

To aid in interpretation, we visualize the conditional response probabilities for the selected four-class solution at each grade. These plots show the probability of endorsing each response category within each latent profile and help clarify how the classes

# Source plot_lca function

source(here("tc_lta","functions", "plot_lca.txt"))

# Define grades and folders

grades <- c("g7", "g10", "g12")

folder_paths <- c(

g7 = here("tc_lta","g7_enum"),

g10 = here("tc_lta","g10_enum"),

g12 = here("tc_lta","g12_enum")

)

# Generate plot for each grade

for (grade in grades) {

# Read 4-class model

model_file <- file.path(folder_paths[grade], paste0("c4_", grade, ".out"))

model <- readModels(model_file, quiet = TRUE)

# Base plot

base_plot <- plot_lca(model_name = model)

# Get class sizes and round to 1 decimal

c_size <- round(model$class_counts$modelEstimated$proportion * 100, 1)

# Customize plot with generic class labels

final_plot <- base_plot +

scale_colour_discrete(labels = c(

glue("Class 1 ({c_size[1]}%)"),

glue("Class 2 ({c_size[2]}%)"),

glue("Class 3 ({c_size[3]}%)"),

glue("Class 4 ({c_size[4]}%)")

)) +

scale_shape_discrete(labels = c(

glue("Class 1 ({c_size[1]}%)"),

glue("Class 2 ({c_size[2]}%)"),

glue("Class 3 ({c_size[3]}%)"),

glue("Class 4 ({c_size[4]}%)")

)) +

scale_linetype_discrete(labels = c(

glue("Class 1 ({c_size[1]}%)"),

glue("Class 2 ({c_size[2]}%)"),

glue("Class 3 ({c_size[3]}%)"),

glue("Class 4 ({c_size[4]}%)")

)) +

labs(title = glue("4-Class LCA Item Probability Plot for Grade {toupper(grade)}"))

# Display plot

print(final_plot)

}

26.8 Conduct step 1: Invariant Latent Transition Analysis

In Step 1 of the latent transition analysis (LTA), we estimate an unconditional longitudinal model with measurement invariance across timepoints. This means that item-response thresholds are constrained to be equal across Grades 7, 10, and 12, allowing us to interpret latent class transitions over time on a consistent measurement scale. This step provides the foundation for the 3-step approach by establishing a stable class structure across waves.

# Define LTA model

lta_model <- mplusObject(

TITLE = "4-Class LTA for G7, G10, G12",

VARIABLE = glue(

"categorical = AB39A AB39H AB39I AB39K AB39L AB39M AB39T AB39U AB39W AB39X

GA32A GA32H GA32I GA32K GA32L GA33A GA33H GA33I GA33K GA33L

KA46A KA46H KA46I KA46K KA46L KA47A KA47H KA47I KA47K KA47L;

usevar = AB39A AB39H AB39I AB39K AB39L AB39M AB39T AB39U AB39W AB39X

GA32A GA32H GA32I GA32K GA32L GA33A GA33H GA33I GA33K GA33L

KA46A KA46H KA46I KA46K KA46L KA47A KA47H KA47I KA47K KA47L

FEMALE MINORITY STEM STEMSUP ENGINEER MATHG8 SCIG8 MATHG11 SCIG11

MATHG7 MATHG10 MATHG12;

auxiliary = FEMALE MINORITY STEM STEMSUP ENGINEER MATHG8 SCIG8 MATHG11 SCIG11

MATHG7 MATHG10 MATHG12;

missing = all(9999);

classes = c1(4) c2(4) c3(4);

auxiliary = stem;"

),

ANALYSIS = "

estimator = mlr;

type = mixture;

starts = 500 10;

processors = 4;",

MODEL = glue(

"%overall%

c2 on c1;

c3 on c2;

MODEL c1:

%c1#1%

[AB39A$1-AB39X$1] (1-10);

%c1#2%

[AB39A$1-AB39X$1] (11-20);

%c1#3%

[AB39A$1-AB39X$1] (21-30);

%c1#4%

[AB39A$1-AB39X$1] (31-40);

MODEL c2:

%c2#1%

[GA32A$1-GA33L$1] (1-10);

%c2#2%

[GA32A$1-GA33L$1] (11-20);

%c2#3%

[GA32A$1-GA33L$1] (21-30);

%c2#4%

[GA32A$1-GA33L$1] (31-40);

MODEL c3:

%c3#1%

[KA46A$1-KA47L$1] (1-10);

%c3#2%

[KA46A$1-KA47L$1] (11-20);

%c3#3%

[KA46A$1-KA47L$1] (21-30);

%c3#4%

[KA46A$1-KA47L$1] (31-40);"

),

OUTPUT = "svalues; sampstat; tech11; tech14;",

SAVEDATA = "

file = lta_4class_cprobs.dat;

save = cprobabilities;

missflag = 9999;",

PLOT = "

type = plot3;

series = AB39A AB39H AB39I AB39K AB39L AB39M AB39T AB39U AB39W AB39X

GA32A GA32H GA32I GA32K GA32L GA33A GA33H GA33I GA33K GA33L

KA46A KA46H KA46I KA46K KA46L KA47A KA47H KA47I KA47K KA47L (*);",

rdata = lsay_data

)

# Run LTA model

lta_fit <- mplusModeler(

lta_model,

dataout = here("tc_lta","lta_enum", "lsay_lta.dat"),

modelout = here("tc_lta","lta_enum", "lta_4class.inp"),

check = TRUE,

run = TRUE,

hashfilename = FALSE

)26.8.1 Plot Invariant Probability Plot

After fitting the invariant LTA model, we examine the conditional response probabilities for each latent class. These plots illustrate how students in each profile tend to respond to the attitudinal items, averaged across timepoints. Because the model assumes measurement invariance, differences between classes can be interpreted consistently across Grades 7, 10, and 12.

# Load LTA model

lta_model <- readModels(here("tc_lta","lta_enum", "lta_4class.out"), quiet = TRUE)

# Define G7 items

g7_items <- c("AB39A", "AB39H", "AB39I", "AB39K", "AB39L", "AB39M", "AB39T", "AB39U", "AB39W", "AB39X")

# Define descriptive item labels (from Table 1 in the paper)

item_labels <- c(

"I enjoy math",

"Math is useful in everyday problems",

"Math helps a person think logically",

"It is important to know math to get a good job",

"I will use math in many ways as an adult",

"I enjoy science",

"Science is useful in everyday problems",

"Science helps a person think logically",

"It is important to know science to get a good job",

"I will use science in many ways as an adult"

)

# Define class names from the paper

class_names <- c(

"Very Positive",

"Qualified Positive",

"Neutral",

"Less Positive"

)

# Inline plot_lta function (with updated title to match Figure 1)

plot_lta <- function(model_name, time_point = "c1", items = NULL) {

probs <- data.frame(model_name$parameters$probability.scale)

pp_plots <- probs %>%

mutate(LatentClass = sub("^", "Class ", LatentClass)) %>%

filter(grepl(paste0("^Class ", toupper(time_point), "#"), LatentClass)) %>%

filter(category == 2) %>%

dplyr::select(est, LatentClass, param)

if (!is.null(items)) {

pp_plots <- pp_plots %>%

filter(param %in% items)

} else {

pp_plots <- pp_plots %>%

filter(param %in% unique(param)[1:10])

}

# Apply class names from the paper

pp_plots <- pp_plots %>%

mutate(LatentClass = case_when(

LatentClass == "Class C1#1" ~ class_names[1],

LatentClass == "Class C1#2" ~ class_names[2],

LatentClass == "Class C1#3" ~ class_names[3],

LatentClass == "Class C1#4" ~ class_names[4],

TRUE ~ LatentClass

))

pp_plots <- pp_plots %>%

pivot_wider(names_from = LatentClass, values_from = est) %>%

relocate(param, .after = last_col())

# Extract class proportions (using Grade 7 proportions from Table 4)

c_size <- data.frame(cs = c(34, 33, 18, 14))

colnames(pp_plots)[1:4] <- paste0(colnames(pp_plots)[1:4], glue(" ({c_size[1:4,]}%)"))

plot_data <- pp_plots %>%

rename("param" = ncol(pp_plots)) %>%

reshape2::melt(id.vars = "param") %>%

mutate(param = fct_inorder(param))

# Apply descriptive item labels

levels(plot_data$param) <- item_labels

# Create the plot with the exact title from Figure 1

p <- plot_data %>%

ggplot(

aes(

x = param,

y = value,

shape = variable,

linetype = variable,

group = variable

)

) +

geom_point(size = 3) +

geom_line() +

scale_shape_manual(values = c(16, 17, 15, 18)) +

scale_linetype_manual(values = c("solid", "dashed", "dotted", "dotdash")) +

scale_color_grey(start = 0.1, end = 0.6) +

scale_x_discrete(labels = function(x) str_wrap(x, width = 15)) +

ylim(0, 1) +

labs(

title = "Proportion of seventh graders endorsing each item by attitudinal profile", # Exact title from Figure 1

x = "",

y = "Probability of Endorsement",

caption = "Note: The four-class structure is invariant across grades 7, 10, and 12, but probabilities and proportions shown are for Grade 7."

) +

theme_classic() +

theme(